December 19, 2019

Case Study: Complete Recycling

Summary

The Complete Recycling Customer Portal is a business application that allows Complete Recycling’s customers to submit service requests and then connects those requests to service providers. The Portal manages the lifecycle of each service request, from scheduling through fulfillment and invoicing. It also integrates with Complete Recycling’s accounting solution to sync invoices and other accounting transactions.

Problem

Complete Recycling’s legacy Customer Portal had been built on an outdated web framework that was no longer maintained, which made it difficult to add new features. As a result, parts of Complete Recycling’s business were run through manual processes that the Portal could have handled, and Complete Recycling wanted the new Customer Portal to automate many of these steps. Complete Recycling was also hosting the Customer Portal in its on-premises data center and wanted to move it to a cloud provider for a lower total cost of ownership (TCO) and greater agility.

Solution

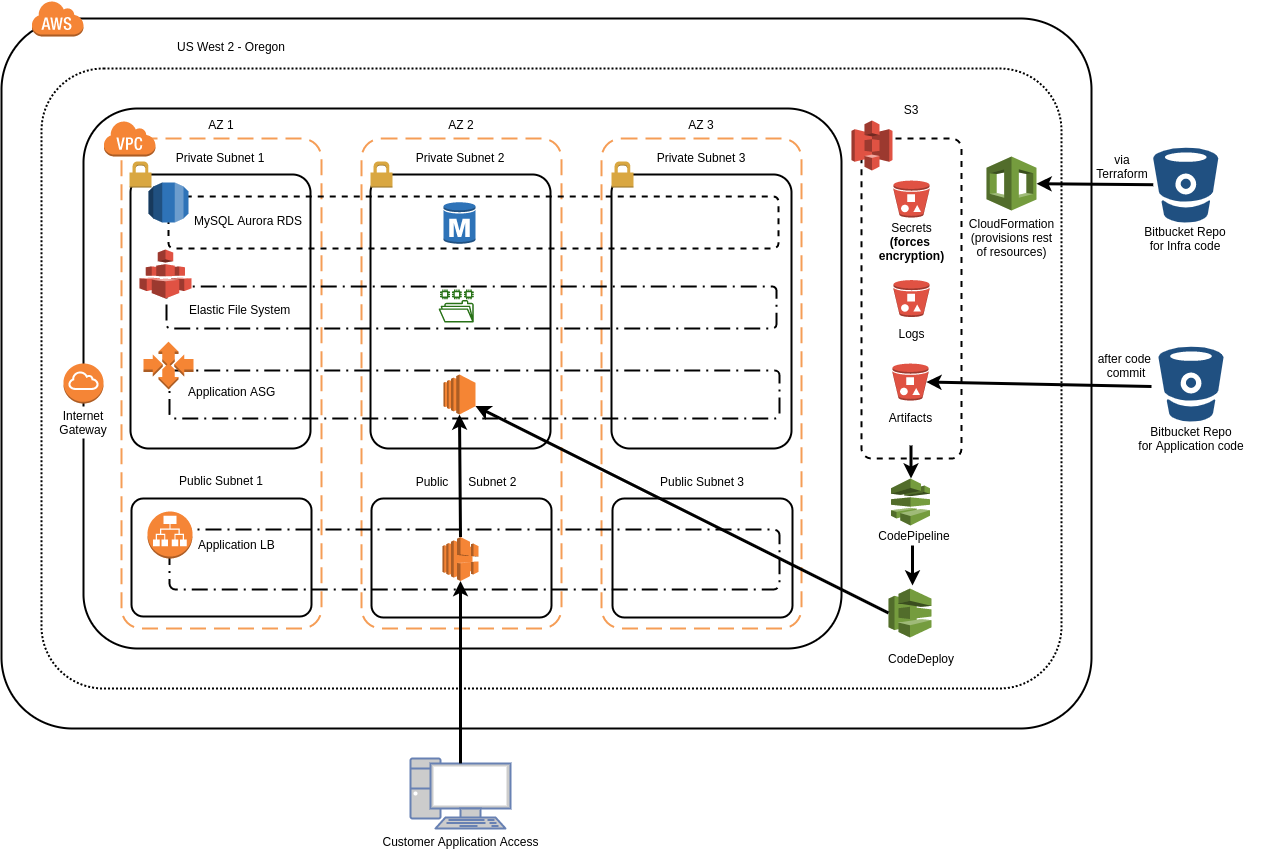

Ordinary Experts worked with Complete Recycling to re-implement the legacy Customer Portal as a Drupal 8 web application hosted on AWS, replacing the on-premises deployment. Ordinary Experts set up separate production and development AWS accounts for Complete Recycling. In both accounts, the application is deployed to the us-west-2 region.

The updated Customer Portal is deployed using Infrastructure as Code (IaC), which makes releasing infrastructure updates quick and reliable. Parameterized CloudFormation templates and Terraform modules provision the components of the Complete Recycling infrastructure. These include the VPC and related networking, EC2 Auto Scaling Groups, Aurora MySQL (RDS), the Application Load Balancer (ALB), EFS, CloudWatch Log Groups, and other related resources.

CloudFormation also provisions a CodePipeline pipeline that watches an S3 source location and runs CodeDeploy to deploy new application revisions. The CloudFormation templates are managed as part of a Terraform module. Terraform creates and updates the stacks and passes values from stack outputs to stack parameters where needed. The Ordinary Experts AWS Static Website with CICD Terraform module takes a similar approach of wrapping CloudFormation templates in a Terraform module.
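The output-to-parameter wiring can be pictured with a small Python sketch. In Terraform itself, this would typically mean referencing one `aws_cloudformation_stack` resource's `outputs` map inside another stack's `parameters` map. The helper `wire_parameters`, the stack values and the parameter names below are all hypothetical. The sketch only shows the data flow, not the project's actual module.

```python
from typing import Dict


def wire_parameters(
    upstream_outputs: Dict[str, str],
    mapping: Dict[str, str],
    static_params: Dict[str, str],
) -> Dict[str, str]:
    """Build a downstream stack's parameters (hypothetical helper).

    mapping: downstream parameter name -> upstream stack output key.
    A missing output raises KeyError, so a broken dependency fails loudly
    instead of deploying a stack with an empty parameter.
    """
    params = dict(static_params)
    for param_name, output_key in mapping.items():
        if output_key not in upstream_outputs:
            raise KeyError(f"upstream stack has no output {output_key!r}")
        params[param_name] = upstream_outputs[output_key]
    return params


# Illustrative values: an EFS stack's output feeds the application stack.
efs_outputs = {"FileSystemId": "fs-0123abcd"}
app_params = wire_parameters(
    efs_outputs,
    mapping={"EfsFileSystemId": "FileSystemId"},
    static_params={"InstanceType": "t3.medium"},
)
print(app_params)
```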

Instance configuration is handled by Chef. To pass configuration from CloudFormation to Chef, non-secret settings are stored in the CloudFormation Metadata and retrieved by Chef using the chef-cfn library. Secrets are stored in a dedicated, encrypted S3 bucket. They are fetched with the Citadel Chef cookbook, which facilitates managing secrets in S3 with Chef.
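The project reads Metadata through the chef-cfn library, whose API is not shown here. The sketch below illustrates only the underlying mechanism, using the standard `cfn-get-metadata` helper script. The helper path assumes an Amazon Linux AMI. The stack name, resource ID and `AppConfig` key are hypothetical. A stub runner lets the example run offline.

```python
import json
import subprocess
from typing import Any, Callable, Dict, List

Runner = Callable[[List[str]], str]


def run_command(cmd: List[str]) -> str:
    """Run a command and return stdout; raises CalledProcessError on failure."""
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout


def fetch_app_config(stack: str, resource: str, region: str,
                     key: str = "AppConfig",
                     runner: Runner = run_command) -> Dict[str, Any]:
    """Read one JSON key from a resource's CloudFormation Metadata.

    Only non-secret settings belong here; secrets come separately from
    the encrypted S3 bucket. The "AppConfig" key name is an assumption.
    """
    cmd = [
        "/opt/aws/bin/cfn-get-metadata",  # helper location on Amazon Linux
        "--stack", stack,
        "--resource", resource,
        "--region", region,
        "--key", key,
    ]
    return json.loads(runner(cmd))


# Offline usage with a stubbed runner; on an instance, use the default runner.
seen: List[List[str]] = []


def fake_runner(cmd: List[str]) -> str:
    seen.append(cmd)
    return '{"site_name": "portal", "files_dir": "/mnt/efs/files"}'


config = fetch_app_config("cr-portal-dev1", "LaunchConfig", "us-west-2",
                          runner=fake_runner)
```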

The infrastructure code is stored in a Git repository that follows the git-flow branching model. The repository's directory structure defines a Terraform module containing the CloudFormation templates, as well as instances of that module for specific AWS accounts.

An abbreviated listing of the structure of the infrastructure repository is as follows:

    ├── CHANGELOG.md
    ├── chef (contents omitted)
    ├── packer
    │   ├── app.json
    │   └── run-packer-with-profile.rb
    ├── README.md
    └── terraform
        ├── accounts
        │   ├── dev-1111111111111111
        │   │   └── us-west-2
        │   │       ├── common
        │   │       │   └── main.tf
        │   │       └── dev1
        │   │           ├── main.tf
        │   │           └── terraform.tfvars
        │   └── prod-2222222222222222
        │       └── us-west-2
        │           ├── common
        │           │   └── main.tf
        │           └── prod1
        │               ├── main.tf
        │               └── terraform.tfvars
        └── modules
            └── cr_portal
                ├── application.yaml
                ├── cicd.yaml
                ├── efs.yaml
                ├── main.tf
                ├── pipeline_bucket.yaml
                └── rds.yaml

The ‘chef’ directory (contents omitted) contains all of the Chef cookbooks needed to configure the application. Community cookbooks are vendored with Berkshelf.

Terraform provisions these Chef cookbooks into S3 when ‘terraform apply’ is run. It zips up the cookbooks, uploads the archive to S3 and computes the archive's MD5 hash. It then supplies this hash as a parameter to the CloudFormation template, which embeds the value in the UserData of the application LaunchConfiguration. A change to any cookbook changes the hash, and therefore the UserData. CloudFormation then replaces the Launch Configuration, which in turn triggers the Auto Scaling Group's rolling update. This enables rapid local development: a DevOps engineer can re-provision instances with new Chef code simply by running ‘terraform apply’ from their local workstation.
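A minimal Python sketch of the packaging step follows, assuming a local `cookbooks` directory. In Terraform, the equivalent might use the `archive_file` data source (its `output_md5` attribute) or the `filemd5()` function; the source does not say which mechanism the project uses. The cookbook name and file paths in the usage section are hypothetical.

```python
import hashlib
import tempfile
import zipfile
from pathlib import Path

# Fixed entry timestamp (the earliest a zip entry can hold), so the archive
# bytes depend only on file names and contents, not on when it was built.
FIXED_DATE = (1980, 1, 1, 0, 0, 0)


def build_cookbook_zip(src_dir: Path, zip_path: Path) -> str:
    """Zip src_dir reproducibly and return the archive's MD5 hex digest."""
    files = sorted(p for p in src_dir.rglob("*") if p.is_file())
    with zipfile.ZipFile(zip_path, "w") as zf:
        for path in files:
            info = zipfile.ZipInfo(path.relative_to(src_dir).as_posix(), FIXED_DATE)
            info.compress_type = zipfile.ZIP_DEFLATED
            info.external_attr = 0o644 << 16  # rw-r--r-- when extracted by unzip
            zf.writestr(info, path.read_bytes())
    return hashlib.md5(zip_path.read_bytes()).hexdigest()


# Usage: identical cookbooks give identical hashes; an edit changes the hash.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    recipe = root / "cookbooks" / "cr_portal" / "recipes" / "default.rb"
    recipe.parent.mkdir(parents=True)
    recipe.write_text("package 'httpd'\n")
    first = build_cookbook_zip(root / "cookbooks", root / "a.zip")
    second = build_cookbook_zip(root / "cookbooks", root / "b.zip")
    recipe.write_text("package 'nginx'\n")
    third = build_cookbook_zip(root / "cookbooks", root / "c.zip")
```

The sorted entry order and fixed timestamps matter for this design. Without them, every `terraform apply` could yield a new hash even when no cookbook changed, and each new hash would replace every instance needlessly.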

In the UserData, cfn-init runs first and sets up CloudWatch logging for the instance. Next, the Chef zip file is downloaded from S3 and extracted. Finally, chef-client runs in solo mode to provision the instance, and cfn-signal reports the success or failure of the provisioning back to CloudFormation.
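The boot sequence above could be rendered as a UserData script roughly like the one below. In a real template, it would more likely be assembled inside CloudFormation with `Fn::Sub` or `Fn::Join`. Python's `string.Template` is used here only to keep the example self-contained. The logical IDs, bucket, key and `/var/chef` paths are hypothetical. `chef-solo` stands in for the solo-mode chef-client run. The helper paths assume Amazon Linux.

```python
from string import Template

# $$ is Template's escape for a literal $, so "$$?" renders as the shell's "$?".
USERDATA = Template("""#!/bin/bash -x
# Cookbook bundle MD5: $cookbook_md5
# Any change to this hash changes the UserData, and so the Launch Configuration.
(
  set -e
  # Apply AWS::CloudFormation::Init metadata (e.g. CloudWatch logging setup).
  /opt/aws/bin/cfn-init -v --stack $stack --resource $launch_config --region $region
  # Fetch and unpack the cookbook bundle uploaded by Terraform.
  aws s3 cp "s3://$bucket/$key" /tmp/cookbooks.zip
  mkdir -p /var/chef
  unzip -o /tmp/cookbooks.zip -d /var/chef
  # Converge the instance with Chef in solo mode.
  chef-solo -c /var/chef/solo.rb -j /var/chef/node.json
)
# Report the subshell's exit status (0 on success) to CloudFormation.
/opt/aws/bin/cfn-signal -e $$? --stack $stack --resource $asg --region $region
""")


def render_userdata(**values: str) -> str:
    """Fill in the template; substitute() raises KeyError for a missing value."""
    return USERDATA.substitute(**values)


script = render_userdata(
    stack="cr-portal-dev1", launch_config="LaunchConfig", asg="AutoScalingGroup",
    region="us-west-2", bucket="example-chef-bucket", key="cookbooks.zip",
    cookbook_md5="0123456789abcdef0123456789abcdef",
)
```

The script wraps the provisioning steps in a subshell with `set -e`, so that any failing step ends the subshell with a non-zero status. cfn-signal then reports that status.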

Other infrastructure-level changes, such as changing AMIs, are rolled out through the Auto Scaling Group's UpdatePolicy when a ‘terraform apply’ is run. Terraform passes the updated parameters to the CloudFormation templates. CloudFormation then updates the Auto Scaling Group and its Launch Configuration, which triggers replacement of the instances as configured by the AutoScalingRollingUpdate policy. As mentioned above, application deployments happen automatically when a code bundle is pushed to the CodePipeline S3 source location.
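The rolling-update behavior comes from the `CreationPolicy` and `UpdatePolicy` attributes on the Auto Scaling Group resource. The sketch below builds such a resource as a Python dict and prints it as template JSON. The logical IDs (`LaunchConfig`, `PrivateSubnetIds`), group sizes and timeouts are illustrative assumptions, not the project's values. The check function is a hypothetical helper. It encodes one constraint: MinInstancesInService must stay below the group's MaxSize.

```python
import json

# Hypothetical ASG resource; logical IDs, sizes, and timeouts are illustrative.
ASG = {
    "Type": "AWS::AutoScaling::AutoScalingGroup",
    "Properties": {
        # Ref returns the Launch Configuration's name; a replaced Launch
        # Configuration (new AMI, new UserData) changes it and starts the update.
        "LaunchConfigurationName": {"Ref": "LaunchConfig"},
        "MinSize": "2",
        "MaxSize": "4",
        "VPCZoneIdentifier": {"Ref": "PrivateSubnetIds"},
    },
    # On creation, wait for cfn-signal from two instances (or fail after 20 min).
    "CreationPolicy": {"ResourceSignal": {"Count": 2, "Timeout": "PT20M"}},
    "UpdatePolicy": {
        "AutoScalingRollingUpdate": {
            "MaxBatchSize": 1,              # replace one instance at a time
            "MinInstancesInService": 2,     # keep two instances serving
            "WaitOnResourceSignals": True,  # each new instance must cfn-signal
            "PauseTime": "PT20M",           # how long to wait for that signal
        }
    },
}


def check_rolling_update(resource: dict) -> None:
    """Sanity check: MinInstancesInService must be below the group's MaxSize."""
    policy = resource["UpdatePolicy"]["AutoScalingRollingUpdate"]
    if policy["MinInstancesInService"] >= int(resource["Properties"]["MaxSize"]):
        raise ValueError("MinInstancesInService must be less than MaxSize")


check_rolling_update(ASG)
print(json.dumps({"Resources": {"AutoScalingGroup": ASG}}, indent=2))
```

The setting `WaitOnResourceSignals` connects the rolling update to the cfn-signal call in the UserData. With it enabled, CloudFormation waits for each new instance's Chef run to report success before moving to the next batch.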

For this project, the team used Bitbucket Pipelines to bundle the application code and push it to S3. For added reliability, CloudWatch alarms monitor the services and send email alerts under specific conditions to notify developers and platform administrators of critical issues. CloudWatch Logs metric filters, provisioned by the CloudFormation templates, are also used to alert the application team when application errors (e.g. HTTP status 500) appear in the logs.
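The following is a sketch of a 500-error metric filter, assuming Apache/Nginx combined-format access logs. A metric filter only turns matching log events into a metric. An alarm on that metric, with an SNS topic action, would send the actual notification. The request dictionary matches the keyword arguments of boto3's `logs` client `put_metric_filter()`. The log group, filter, metric and namespace names are hypothetical. `count_500s` is a rough local approximation of the space-delimited pattern, not CloudWatch's matching engine.

```python
import re
from typing import Dict, List

# Space-delimited pattern: the 6th field (HTTP status) must equal 500;
# "..." lets the remaining fields (size, referer, user agent) vary.
FILTER_PATTERN = "[ip, id, user, timestamp, request, status_code=500, ...]"


def metric_filter_request(log_group: str) -> Dict[str, object]:
    """Keyword arguments for boto3's logs client put_metric_filter()."""
    return {
        "logGroupName": log_group,
        "filterName": "http-500-errors",
        "filterPattern": FILTER_PATTERN,
        "metricTransformations": [{
            "metricName": "Http500Count",
            "metricNamespace": "CRPortal",
            "metricValue": "1",  # add 1 to the metric per matching log line
        }],
    }


# Bracketed timestamps and quoted requests count as single fields, the way
# space-delimited filter patterns treat them.
_TOKEN = re.compile(r'\[[^\]]*\]|"[^"]*"|\S+')


def count_500s(lines: List[str]) -> int:
    """Local approximation of the filter: the 6th field must equal 500."""
    count = 0
    for line in lines:
        fields = _TOKEN.findall(line)
        if len(fields) >= 6 and fields[5] == "500":
            count += 1
    return count


sample = [
    '10.0.0.1 - - [19/Dec/2019:10:00:00 +0000] "GET /node/1 HTTP/1.1" 200 512 "-" "curl/7.61"',
    '10.0.0.2 - - [19/Dec/2019:10:00:01 +0000] "POST /user/login HTTP/1.1" 500 0 "-" "Mozilla/5.0"',
]
errors = count_500s(sample)
# With AWS credentials, the filter could be created with:
#   boto3.client("logs").put_metric_filter(**metric_filter_request("/cr-portal/apache/access"))
```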

AWS Services

- CloudFormation

- CloudFront

- CloudWatch Logs

- CodeBuild

- CodeDeploy

- CodePipeline

- EC2

- EFS

- RDS (MySQL Aurora)

- Route 53

3rd Party Services

- QuickBooks Online

- Bitbucket

Results

Through the launch of the re-developed Customer Portal and its infrastructure automation, Complete Recycling has been able to rapidly deploy environments for both production and pre-production workloads. Ordinary Experts migrated this application out of Complete Recycling’s on-premises data center and integrated it with their back-office solutions. Ordinary Experts continues to support Complete Recycling with new features and infrastructure updates.

Application Architecture